Checkpoint 2

Last Updated: Jan 20, 2026

During the SLAM part of the lab, you will build an increasingly sophisticated algorithm for mapping and localizing in the environment. You will begin by constructing an occupancy grid using known poses. Following that, you’ll implement Monte Carlo Localization in a known map. Finally, you will put each of these pieces together to create a full SLAM system.

Contents

- Contents

- Design Lab

- Task 2.1 Mapping

- Task 2.2 Localization

- Task 2.3 Simultaneous Localization and Mapping (SLAM)

- Checkpoint Submission

Design Lab

We’ve released the Design Lab Guide!

You need to design and build a forklift mechanism. The design file is due with your Checkpoint 2 submission, but it doesn’t need to be final, a prototype version is perfectly fine. The goal is to help you stay on track.

Designing, prototyping, and testing should continue alongside the main checkpoints until the final competition.

Task 2.1 Mapping

In this task, you will implement odometry-based occupancy grid mapping.

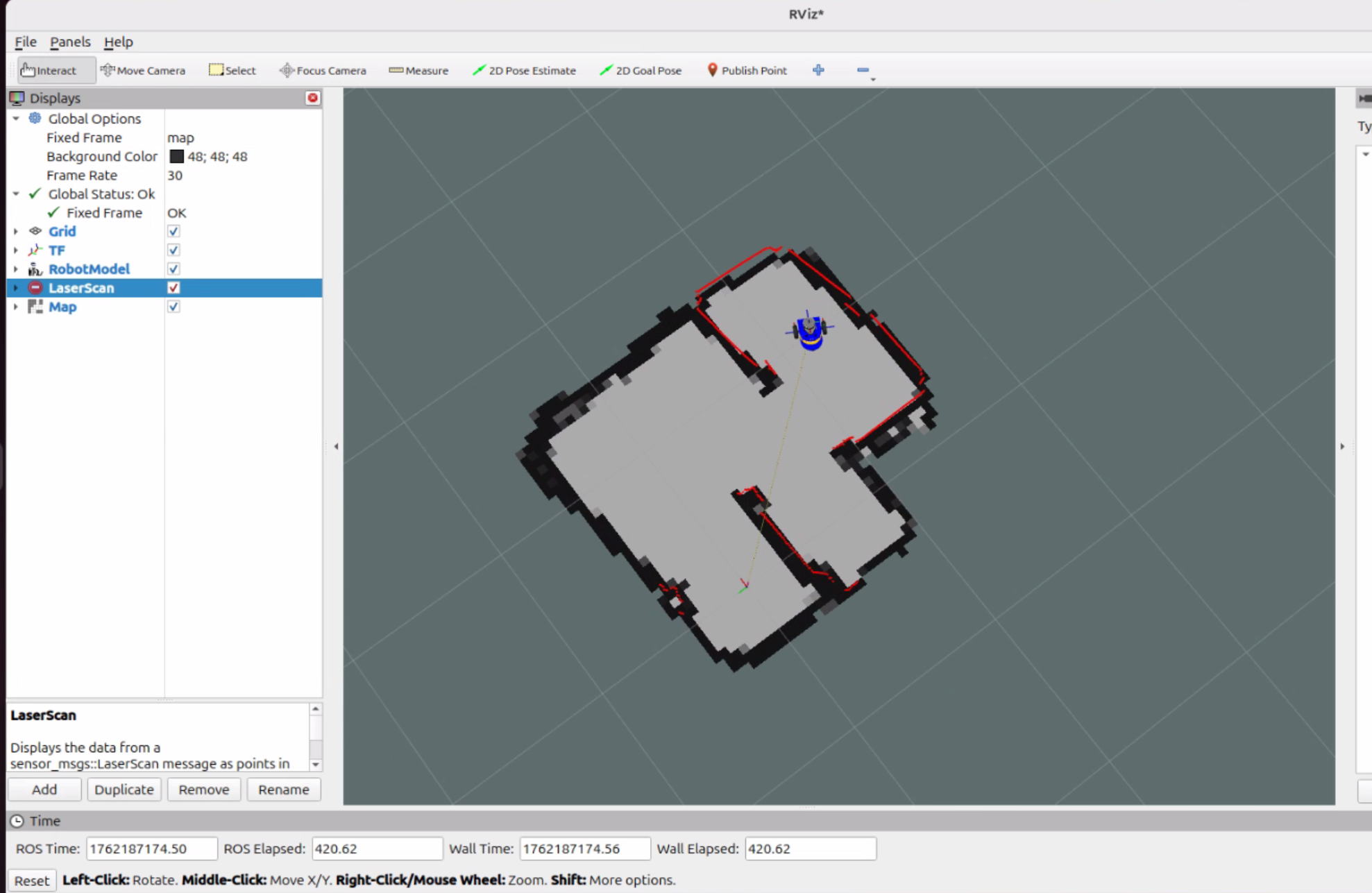

There is a demo video below shows the workflow after completing all TODOs in the template code. It demonstrates how to map and how to view your map in RViz or Foxglove Studio.

TODO

- Check the ROB550 GitLab

mbot_ros_labsupstream to see if there is any new commits to pull. - Install Foxglove Bridge. We will introduce Foxglove Studio, a web-based visualization tool in this task, as an alternative to NoMachine.

sudo apt install ros-$ROS_DISTRO-foxglove-bridge - All work for this task is in the package

mbot_mapping. Start withmapping_node.cpp, search for TODOs. - When finished, compile your code:

cd ~/mbot_ros_labs colcon build --packages-select mbot_mapping source install/setup.bash- Important: You must source the workspace in every relevant terminal after each build. If you don’t, ROS will keep using the old code, and your changes will not take effect.

Explanation of TODOs

You have 3 major tasks in this task:

- Lidar ray interpolation

- A laser scan isn’t instantaneous, the sensor rotates over a finite time interval, the robot’s motion causes spatial distortion. If the robot is moving while scanning:

- The initial beam is captured at start_pose (e.g., x=1.0, y=0).

- The last beam is captured at end_pose say (e.g., x=1.1, y=0).

- According to the message definition of sensor_msgs/LaserScan Message, “timestamp in the header is the acquisition time of the first ray in the scan.” This means the odometry queried by the following line corresponds to the robot’s pose when the first LiDAR ray was fired:

geometry_msgs::msg::TransformStamped tf_odom_base = tf_buffer_->lookupTransform("odom", "base_footprint", scan->header.stamp); - To account for this, the function

MovingLaserScan::interpolateRayinmoving_laser_scan.cppestimates the specific robot pose for each individual beam, effectively “deskewing” the scan data. In the functionMovingLaserScan scan(*msg, current_pose, current_pose_end);, which callsinterpolateRay(scan, start_pose, end_pose);- The start pose should be estimate pose.

- The end pose should be computed based on the scan duration and the robot’s motion.

- A laser scan isn’t instantaneous, the sensor rotates over a finite time interval, the robot’s motion causes spatial distortion. If the robot is moving while scanning:

- Bresenham’s algorithm

- After interpolating all rays, use Bresenham’s algorithm to determine which cells in the occupancy grid each ray passes through.

- Update map cells

- For each beam, update the corresponding cells using log-odds to reflect occupancy probabilities.

How to test?

- Run the following in VSCode Terminal #1 to launch the lidar driver, robot tf tree, robot model:

ros2 launch mbot_bringup mbot_bringup.launch.py - Run the following in the VSCode Terminal #2 to start the mapping node:

cd ~/mbot_ros_labs source install/setup.bash ros2 launch mbot_mapping mapping.launch.py - Using teleop control to move the robot in the maze, run in VSCode Terminal #3:

ros2 run teleop_twist_keyboard teleop_twist_keyboard - Visualize the progress:

- Option 1: In RViz (via NoMachine), details see the demo video.

rviz2 - Option 2: In Foxglove Studio:

# Start the ROS2-Foxglove bridge: ros2 launch foxglove_bridge foxglove_bridge_launch.xml- You can now visualize topics in Foxglove Studio. Details see the demo video.

- Option 1: In RViz (via NoMachine), details see the demo video.

- After mapping the whole area, do not stop the mapping node yet. Save the map in VSCode Terminal #4:

cd ~/mbot_ros_labs/src/mbot_mapping/maps ros2 run nav2_map_server map_saver_cli -f map_name- Replace

map_namewith the name you want for your map. - After saving, you can stop the mapping node.

- Replace

- To view the map:

- Option 1: Use a local viewer. Install a VSCode extension like PGM Viewer to open your map file.

- Option 2: Publish the map in ROS2

cd ~/mbot_ros_labs # we need to re-compile so the launch file can find the newly saved map colcon build --packages-select mbot_mapping source install/setup.bash ros2 launch mbot_mapping view_map.launch.py map_name:=map_name- This will publish the map to the

/maptopic. - You can now view it in RViz (via NoMachine) or Foxglove Studio.

- This will publish the map to the

Expected Result:

Demo Video

Include a screenshot of your map. Analyze the map quality and explain why it is not ideal.

Task 2.2 Localization

Monte Carlo Localization (MCL) is a particle-filter-based localization algorithm. To implement MCL, you need three key components:

- Action model: predicts the robot’s next pose.

- Sensor model: calculates the likelihood of a pose given a sensor measurement.

- Particle filter functions: draw samples, normalize particle weights, and compute the weighted mean pose.

There is a demo video below shows the workflow after completing all TODOs in the template code. It demonstrates how to play the ros bag and visualize localization progress in RViz or Foxglove Studio.

TODO

- All work for this task is in the package

mbot_localization. Start withlocalization_node.cpp, search for TODOs. - When finished, compile your code:

cd ~/mbot_ros_labs colcon build --packages-select mbot_localization source install/setup.bash- Important: You must source the workspace in every relevant terminal after each build. If you don’t, ROS will keep using the old code, and your changes will not take effect.

Explanation of TODOs

Starting from localization_node.cpp, you have 2 main TODOs:

- Construct the obstacle distance grid in

obstacle_distance_grid.cpp. Pre-compute how far each cell is from the nearest obstacle. This will be used in the sensor model. - Complete the particle filter (more complex)

- Resample particles – resample particles based on their weights.

- Apply the action model – move the particles according to odometry; add noise.

- Apply the sensor model – update each particle’s weight based on how well its predicted sensor readings match the actual laser scan. Here we can use the pre-computed obstacle distance grid to calculate these likelihoods.

- Estimate the new pose – compute the weighted mean of the particles based on the posterior distribution.

How to test?

For task 2.2, we provide a rosbag because this task focuses on localization only, and we need a good map for that. Using the bag saves time and lets you work anywhere. All the data required for this task comes from the ROS bag, you DO NOT need to manually publish anything, and DO NOT need to run ros2 launch mbot_bringup mbot_bringup.launch.py.

# You can use the following command to check what is in the bag

$ cd ~/mbot_ros_labs/src/mbot_rosbags

$ ros2 bag info maze1

/amcl_poseserves as the reference pose, it is the “ground truth” you can use to compare against your estimated pose.

- This ROS bag includes the

/cmd_veltopic, unplug the Type-C cable from the Pi to the Pico to prevent the robot from driving away. - Run RViz on NoMachine using provided rviz config file:

cd ~/mbot_ros_labs/src/mbot_localization/rviz ros2 run rviz2 rviz2 -d localization.rviz - Run the localization node in the VSCode Terminal #1:

cd ~/mbot_ros_labs source install/setup.bash ros2 run mbot_localization localization_node --ros-args -p publish_tf:=false - Play the ROS bag in the VSCode Terminal #2:

cd ~/mbot_ros_labs/src/mbot_rosbags/maze1 ros2 bag play maze1.mcap

Demo Video

1) Report in a table the time it takes to update the particle filter for 100, 500 and 1000 particles. Estimate the maximum number of particles your filter can support running at 10Hz.

2) Include a screenshot comparing your estimated pose path with the reference pose path.

Task 2.3 Simultaneous Localization and Mapping (SLAM)

You have now implemented mapping using known poses and localization using a known map. You can now implement the following simple SLAM algorithm:

- Use the first laser scan received to construct an initial map.

- For subsequent laser scans:

- Localize using the map from the previous iteration.

- Update the map using the pose estimate from your localization.

TODO

- All work for this task is in the package

mbot_slam. Start withslam_node.cpp, search for TODOs.- Most TODOs come from Task 2.1 and Task 2.2. You can simply copy and paste your code from those tasks, tune the parameters, and achieve a good working SLAM system. You don’t need to follow the TODOs strictly, feel free to implement them in your own preferred way.

- When finished, compile your code:

cd ~/mbot_ros_labs colcon build --packages-select mbot_slam source install/setup.bash- Important: You must source the workspace in every relevant terminal after each build. If you don’t, ROS will keep using the old code, and your changes will not take effect.

Note: The LiDAR is physically mounted backward, resulting in a 3.14 rad yaw offset between the base_footprint frame and the lidar frame. When projecting LiDAR data into the world frame, be sure to account for this offset.

- This offset is defined in the URDF file located at:

~/mbot_ws/src/mbot_description/urdf - The TF tree is structured as follows:

map → odom → base_footprint → base_link → lidar_link. When you query the transformodom → base_footprint, the system does not include the relationship betweenbase_footprint → lidar_link, we have to manually add the offset. When you queryodom → lidar_link, the yaw offset is already included, so no manual adjustment is needed.

Hints for parameter tuning:

If your mapping and localization are working well, but your full SLAM system is struggling, the problem is often in parameter tuning. Here are some hints to guide your tuning:

- Action model

action_model.hpp- Observe your particle cloud: What is its shape? Is it a tight circle, or an elongated oval following the robot’s path? If

k2_ork1_is too high, your particle cloud might be a “long oval”. - We know the robot’s odometry isn’t perfect, but it’s also not completely wrong. Is a large noise value like 1.0 necessary, or would a much smaller value like 0.001 be more appropriate? Think about how much you trust the odometry.

- Observe your particle cloud: What is its shape? Is it a tight circle, or an elongated oval following the robot’s path? If

- Sensor model

sensor_model.hppsigma_hit_is in meters. If your map resolution is 0.05m (5cm) and yoursigma_hit_is also 0.05m, what does that imply? It means a lidar ray landing one cell away from the obstacle in the map is already at one standard deviation of error.- A smaller

sigma_hit_makes your sensor model stricter, demanding scans align almost perfectly. A larger value is more forgiving. Given the map resolution, experiment with values larger or smaller than 0.05m to see how it affects particle weights.

- Mapping

mapping.hpp- Do you see cells “flickering” between free and occupied? This can happen if a few bad scans can erase a well-defined wall. Tune the log-odds limits: Consider tunning

kMaxLogOddsandkMinLogOdds. to make it harder for a cell’s state to be “flipped” after it’s been confidently marked as occupied or free.

- Do you see cells “flickering” between free and occupied? This can happen if a few bad scans can erase a well-defined wall. Tune the log-odds limits: Consider tunning

- Map resolution

slam_node.cpp- High-resolution maps help localization. Your Task 2.2 may have worked well because the provided map was “crispy” (e.g., 0.01m resolution). If you are using a coarser resolution like 0.05m, try decreasing the cell size (e.g., to 0.03m or 0.02m) here

grid_(10.0, 10.0, 0.02, -5.0, -5.0).

- High-resolution maps help localization. Your Task 2.2 may have worked well because the provided map was “crispy” (e.g., 0.01m resolution). If you are using a coarser resolution like 0.05m, try decreasing the cell size (e.g., to 0.03m or 0.02m) here

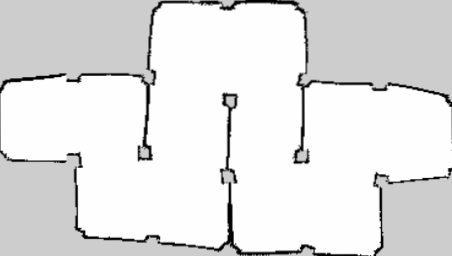

Here are examples of “OK,” “Good,” and “Excellent” maps generated from the slam_test bag. These are related to how competition scoring will be evaluated.

How to test?

- Run the following in VSCode Terminal #1:

ros2 launch mbot_bringup mbot_bringup.launch.py - Put the robot in the maze and start the SLAM Node in VSCode Terminal #2:

cd ~/mbot_ros_labs source install/setup.bash ros2 run mbot_slam slam_node - Start Rviz on NoMachine:

cd ~/mbot_ros_labs/src/mbot_slam/rviz ros2 run rviz2 rviz2 -d slam.rviz - Map in the lab maze, drive the robot manually using the teleop in VSCode Terminal #3:

ros2 run teleop_twist_keyboard teleop_twist_keyboard - After mapping the whole area, do not stop the mapping node yet. Save the map in VSCode Terminal #4:

cd ~/mbot_ros_labs/src/mbot_slam/maps ros2 run nav2_map_server map_saver_cli -f map_name- Replace

map_namewith the name you want for your map.

- Replace

Alternatively, you can work from anywhere using the provided rosbag: 1) first start slam_node, then launch rviz for visualization, finally play the rosbag:

cd ~/mbot_ros_labs/src/mbot_rosbags

ros2 bag play slam_test

- This ROS bag includes the

/cmd_veltopic. Unplug the Type-C cable from the Pi to the Pico before run the ros bag.

1) Create a block diagram showing how the SLAM system components interact.

2) Include a screenshot of your map, and explain why your SLAM-generated map is better than the one from Task 2.1.

Checkpoint Submission

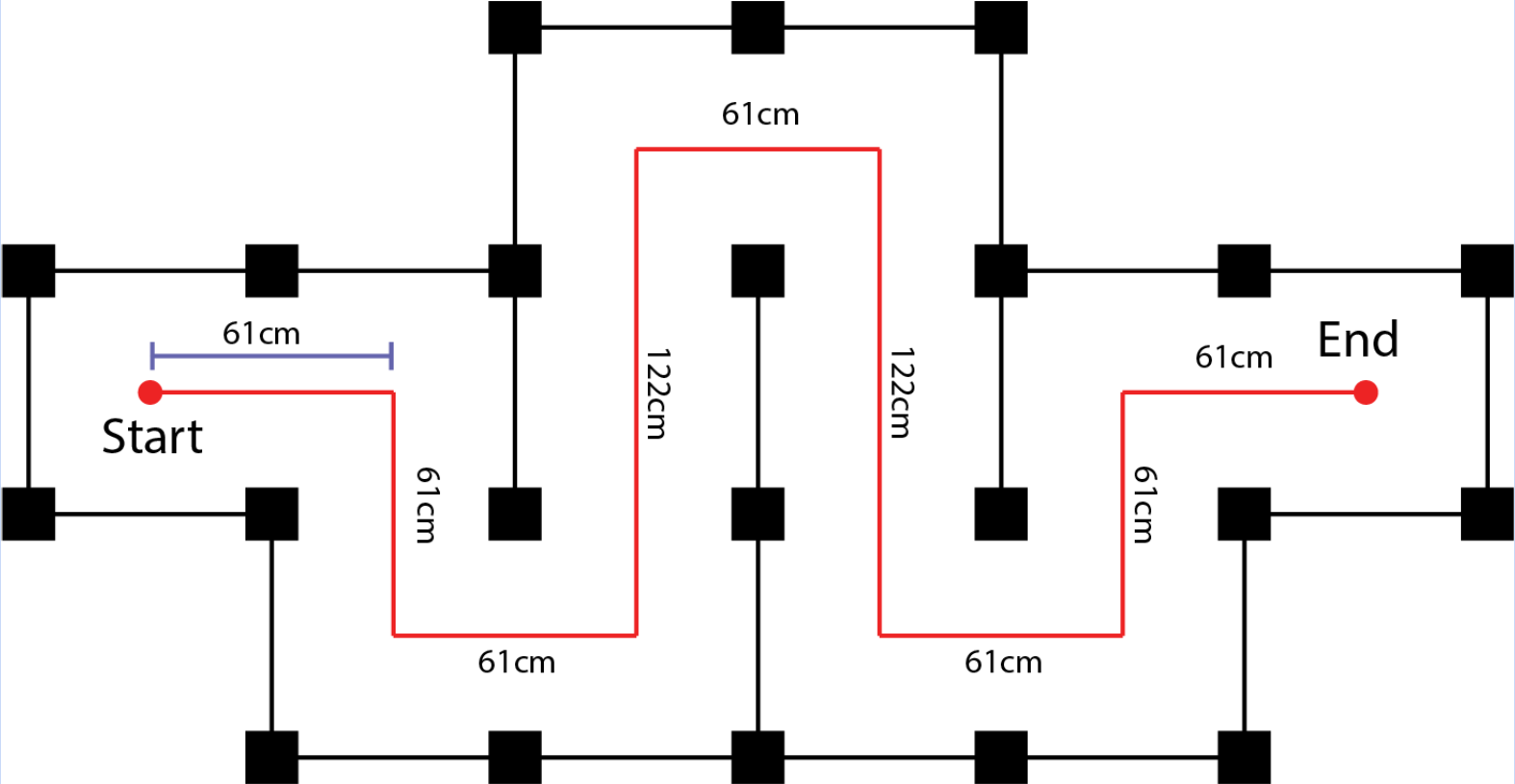

Demonstrate your SLAM system by mapping the maze used in Checkpoint 1. You may either manually drive the robot using teleop or use the motion controller from Checkpoint 1.

- Submit a screenshot of the generated map.

- Submit the map file itself.

- Submit a short description of your SLAM system, including how it works and any key observations.

- Submit your forklift design prototype, it can be in any form, such as a simple sketch, a CAD file, or other representation.